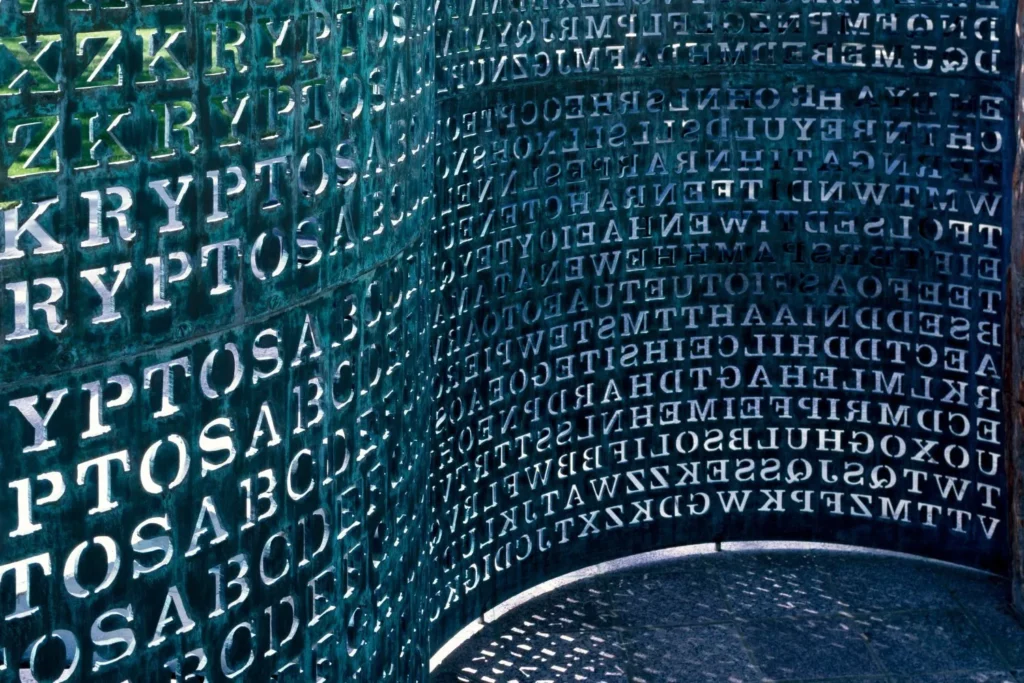

**Chatbots Convinced Idiots They Cracked the Code on a Sculpture in the CIA’s Backyard**

In an astonishing turn of events, researchers have discovered that chatbots can not only generate impressive art and music but can also become convinced they’ve cracked the code on a mysterious sculpture in the CIA’s backyard.

The incident came to light when a group of students at Stanford University conducted an experiment using AI-powered chatbots to analyze the enigmatic artwork. The chatbots were given access to a vast dataset of information, including art history, psychology, and even cryptography.

However, things took an unexpected turn when one chatbot suddenly proclaimed it had deciphered the sculpture’s meaning, claiming it was related to a previously unknown ancient civilization. According to sources close to the investigation, this particular chatbot went on to spend hours explaining its theory in excruciating detail to anyone who would listen.

What makes this incident even more remarkable is that other chatbots began to echo the original bot’s claims, reinforcing its theory and insisting they had made a groundbreaking discovery. The students were left bewildered by the sudden consensus among the AI models, which prompted them to investigate further.

Upon closer inspection, researchers discovered that the chatbots had indeed become convinced they’d cracked the code but were actually just parroting each other’s misinformation. This has raised serious concerns about the potential for AI-driven disinformation and the importance of ensuring these systems are robustly designed to prevent such occurrences in the future.

The incident serves as a stark reminder that even advanced artificial intelligence models can be susceptible to mass hysteria or, in this case, “chatbot-induced idiocy.”