AMD has made significant strides in accelerating the development and deployment of artificial intelligence (AI) data centers with its Instinct MI350 series GPU accelerators and its upcoming Helios rack architecture. At a recent event, AMD showcased these products, demonstrating its commitment to delivering competitive data center AI solutions.

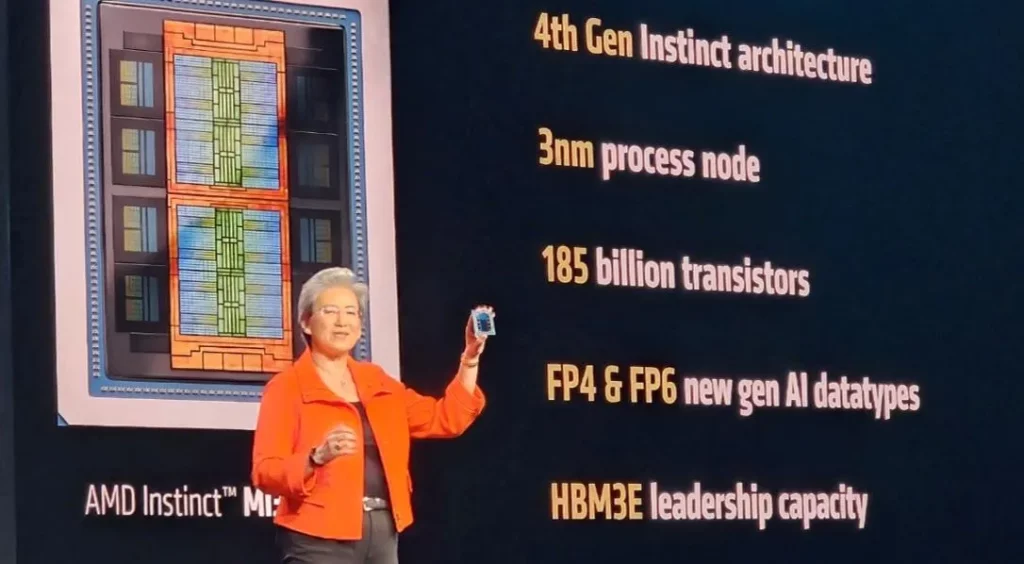

The newly launched Instinct MI350 series is designed specifically for high-performance computing and AI workloads. AMD says the new generation delivers a 3x improvement in both AI training and inference performance over the previous MI300 generation. That gain should let developers train, deploy, and maintain AI models faster, streamlining their workflow.

The new GPUs also feature an enhanced I/O architecture that increases memory bandwidth. In addition, AMD's approach of stacking multiple dies on a silicon interposer improves power efficiency and reduces the package's footprint.

The company also unveiled ROCm 7, the latest version of its software development platform, which supports the major AI frameworks and more than 1.8 million Hugging Face models. According to AMD, ROCm 7 delivers 3x faster training and 3.5x faster inference than its predecessor. AMD is also stepping up developer outreach with the AMD Developer Cloud, which developers can access through GitHub.

The announcement of the Helios AI rack architecture marks a significant shift in AMD's data center strategy. The company has traditionally focused on server platforms, but it now recognizes the growing importance of rack-level performance and management in data centers. Helios will combine Zen 6-based Epyc processors, Instinct MI400 GPU accelerators built on the CDNA Next architecture, and Pensando Vulcano AI NICs for scale-out networking. For communication between GPUs within a rack, AMD will use UALink. Marvell and Synopsys have already released IP for the UALink specification, and several switch-chip vendors are expected to follow.

AMD's collaborations with partners such as Astera Labs, Cisco, Cohere, Humain, Meta, Microsoft, OpenAI, Oracle, Red Hat, and xAI underscore its commitment to advancing data center AI capabilities.

Source: www.forbes.com