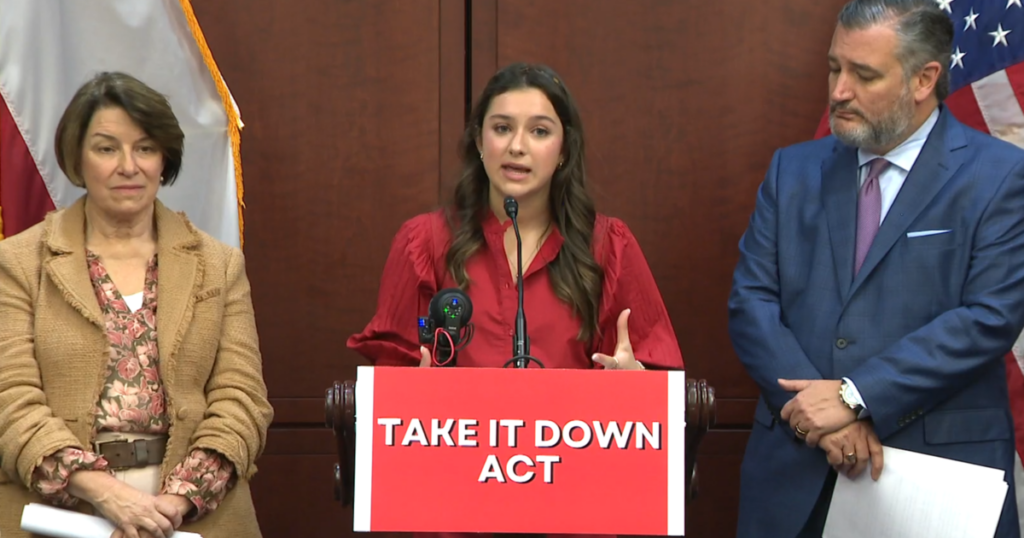

Teen victim of deepfake pornography urges Congress to pass ‘Take It Down Act’

A 15-year-old Texas girl has spoken out about the trauma she endured after a classmate created AI-generated “deepfake” pornography and shared it online.

Anna McAdams, the mother of Elliston Berry, said her daughter was devastated to discover that someone had taken a picture from her Instagram account, run it through an artificial intelligence program to remove her dress, and then sent the digitally altered image on Snapchat.

“It could have easily been my daughter,” San Francisco Chief Deputy City Attorney Yvonne Mere said, adding that the issue is not just about technology or AI but about sexual abuse.

In a statement, Snap told CBS News that it cares deeply about its community’s safety and well-being, and that sharing nude images, including those of minors, whether real or AI-generated, is a clear violation of its Community Guidelines.

A bill called the “Take It Down Act” has passed the Senate and is now attached to a larger government funding bill awaiting a vote in the House.

Source: www.cbsnews.com